In April of 2007, Rand Fishkin of SEOMoz.org published the results of a survey of top SEOs regarding what they believed were the top search engine ranking factors. It was pretty interesting stuff, but something happened recently that reminded me that all of these top SEOs totally missed the boat – none of them mentioned the most important search engine ranking factor of them all – QA.

QA (aka “Quality Assurance”) is the process by which you check all of the work done on your website before you release it to the rest of the world, including the search engines. QA is hard enough to do on “normal” feature releases, but for some reason whenever there is SEO involved it gets even harder, usually because the team has not adopted SEO as a part of its everyday process. Screwing up QA on SEO features can mean losing much, if not all, of your traffic at the flick of a switch.

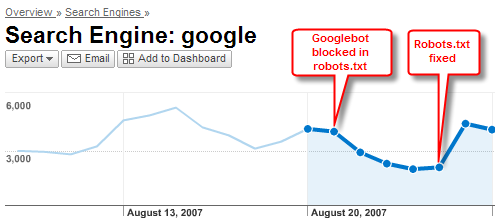

Here’s a perfect example: I noticed that one client’s Google traffic had dropped almost 50% from two days earlier and asked what they had done to the site. They told me “nothing”, but when I looked at the robots.txt file, I saw they had rewritten it in such a way that it blocked Google from crawling the entire site. I quickly alerted them and they corrected the problem, but had I not been looking, they probably would have lost a lot more traffic for a lot longer. The problem was that some of the developers were not following the SEO QA process I had given them. As the CEO later told me, this could have been “an enormous tragedy” for their business.
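A check for this kind of mistake can be scripted so that it runs before every release. The following is only a minimal sketch: it uses Python’s standard `urllib.robotparser`, and the `blocked_urls` helper, the example.com URLs and the two sample robots.txt bodies are all made up for illustration.

```python
from urllib.robotparser import RobotFileParser


def blocked_urls(robots_txt, urls, user_agent="Googlebot"):
    """Return the URLs from `urls` that `user_agent` is not allowed to crawl."""
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())
    return [url for url in urls if not parser.can_fetch(user_agent, url)]


# Hypothetical pages that must stay crawlable after every release.
MUST_BE_CRAWLABLE = [
    "https://www.example.com/",
    "https://www.example.com/products/widget",
]

# A robots.txt that only hides utility pages, and one that hides everything.
good_robots = "User-agent: *\nDisallow: /login\nDisallow: /print/\n"
bad_robots = "User-agent: *\nDisallow: /\n"

print(blocked_urls(good_robots, MUST_BE_CRAWLABLE))  # none of the pages are blocked
print(blocked_urls(bad_robots, MUST_BE_CRAWLABLE))   # every page is blocked
```

In practice you would feed the function the robots.txt from your staging build and fail the release if the returned list is not empty.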

So say what you will about title tags and external links, I say SEO QA is the #1 search engine ranking factor.

Here’s my standard SEO QA checklist for your enjoyment. Feel free to add more SEO QA ideas in the comments section.

The Local SEO Guide SEO QA Checklist

The following items should be tested before every new release:

- For dynamic pages, does each page type have a unique Title, Meta Description and Meta Keywords tag formula?

- For static pages, does each page have a unique Title, Meta Description and Meta Keywords tag?

- Inspect your robots.txt file and make sure that only URLs that you don’t want the search engines to see are listed. Examples of pages you probably don’t want indexed include login, email to a friend, printer friendly pages, most footer pages, etc. If you see “Disallow: /”, this means you are blocking all robots from crawling the entire site. This is not good.

- Run a report of all dynamic page types that have a “noindex” tag and confirm that only page types that you don’t want the search engines to see have this tag.

- Test all URL redirects. Make sure the following redirects are in place:

    - Non-www version of every URL 301s to www version (or vice-versa)

    - URLs that end in / 301 to version that has no / (or vice-versa)

    - All mixed case URLs 301 to lowercase versions

    - Test version subdomains (e.g. alpha.site.com) either 301 to root domain or else are password protected.

- Make sure any URLs that are being eliminated 301 to the new version of the URL. If there is no new version, make sure they 301 to the root domain or a related directory on the site.

- If you are using an XML sitemap file to update Google, Yahoo & MSN, has the XML file been updated to reflect the new changes?

- Run a crawler such as Linkscan against your site to make sure that your pages are not delivering error codes and to see if there are any chain redirects (e.g. 301 to 301 to 301). Avoid chain redirects if possible.

- Create a list of items that have changed that could affect SEO to help quickly diagnose any issues that may result from the new release. Typical items include:

    - Addition of or reduction of links on a page

    - Rewritten page copy and meta information

    - Addition of new pages

    - Eliminated URLs

    - Redirected URLs
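The redirect items on the checklist lend themselves to a small script. What follows is a rough sketch under stated assumptions, not a finished tool: it assumes the site’s policy is www, lowercase and no trailing slash (swap in your own “vice-versa” choices), and it assumes a crawler has already produced a mapping of each URL to the URL it 301s to. The names `canonical_url`, `redirect_chain` and `crawled`, and the example.com URLs, are hypothetical.

```python
from urllib.parse import urlsplit, urlunsplit


def canonical_url(url):
    """Return the form of `url` that every variant should 301 to.

    Assumed policy for the root domain: www host, lowercase path,
    no trailing slash. It does not handle test subdomains.
    """
    parts = urlsplit(url)
    host = parts.netloc.lower()
    if not host.startswith("www."):
        host = "www." + host
    path = parts.path.lower()
    if len(path) > 1 and path.endswith("/"):
        path = path.rstrip("/")
    return urlunsplit((parts.scheme, host, path or "/", parts.query, parts.fragment))


def redirect_chain(url, redirects, max_hops=10):
    """Follow `redirects` (source URL -> 301 target) and return every URL visited."""
    chain = [url]
    # max_hops guards against redirect loops in the crawl data.
    while chain[-1] in redirects and len(chain) <= max_hops:
        chain.append(redirects[chain[-1]])
    return chain


# Hypothetical crawl output: each key 301s to its value.
crawled = {
    "http://example.com/Shoes/": "http://www.example.com/Shoes/",
    "http://www.example.com/Shoes/": "http://www.example.com/shoes/",
    "http://www.example.com/shoes/": "http://www.example.com/shoes",
}

for start in crawled:
    chain = redirect_chain(start, crawled)
    hops = len(chain) - 1
    right_target = chain[-1] == canonical_url(start)
    print(start, "| hops:", hops,
          "| chained" if hops > 1 else "| single",
          "| ok" if right_target else "| WRONG TARGET")
```

Any URL reported as “chained” ends up in the right place but takes more than one 301 to get there, which is the chain redirect the checklist says to avoid.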

50 Response Comments

Good post.

I also added a few more factors that I check for, in a list at: http://sphinn.com/story/8761/

Great list, Andrew. And great job helping your client avoid a potential Internet disaster!

Is it necessary to have unique meta descriptions on every page? And the links, how many links really to a certain… And how can we position ourselves in the ranking…

Hi

I am very confused. I am in India and my client is in the USA. When I search for the client’s site on google.com and yahoo.com with the keyword “NASCAR clothes”, it shows on the first page. But my client told me that it is not on the first page. I want to know why we see it in different positions. What should I do so that it shows in the same position in India and the USA? How do I promote it so that it ranks in the same position on google.com and yahoo.com in India and the USA?

Can you help me find out the reason for this difference?

Thanks

Hi Sunita,

The short answer is that you probably will need relevant inbound links from both US and Indian sites, but it could be more complicated than that depending on what’s going on with your site.

A

As far as I am aware, the factors that count most for local SEO are the whois information and the IP from which you upload the pages.

Excellent list there, I am glad I came across it as I had done a 301 redirect only for http:// to http://www but noticed there are several I could utilise.

I use a fantastic tool, http://www.websitegrader.com, to help me with my SEO. It’s by the guys at Hubspot and was the original reason for me doing the 301 redirect.

Andrew,

GREAT comment. I’ve worked in the high tech industry for years and spent quite a few at a company called National Instruments. They sell hardware and software products for the test and measurement industry. I can tell you that QA is absolutely critical to the success of a software, or any other kind of product.

I guess I never thought of SEO, which is a service, as a product that requires QA, but you are absolutely right. There are so many fly-by-night companies offering SEO (ask me how I know) that having a QA program in place would really help differentiate the tops from the nots.

Mendy

Hi Andrew,

I just started my first WordPress blog and I’m not really sure if it matches up to the checklist you provided (which seems to be a great yardstick with which to measure a site’s SEO).

Do you know if WordPress automatically 301s all www to non-www’s?

Hi David,

I am pretty sure version 2.6 does. There are also some plugins that will do it for you. Here’s one:

http://urbangiraffe.com/plugins/redirection/

Eva,

Google can show different results based on your location. The short answer is that you are accessing different servers known as “data centers” depending on where you are located. Google also tries to deliver more locally relevant content based on your location as well.

A

Andrew:

Good article. Even as we approach the one-year anniversary of its posting, the information is still valuable and relevant. I am interested to see how universal search will mix with local, and how the addition of similar variables will make for much more complex ranking algorithms and SEO.

Many thanks, d!

No matter what the algorithm is, SEO QA will always be at the top of the search engine ranking factors list.

Great checklist and gave me a good resource to build upon as I work in my local community as a local search marketer.

Interesting that I should find this article. Just a couple weeks ago after having a guy upgrade my WP blog to the latest version my traffic started to tank. After a couple days I realized I had a robots.txt blocking all engines. I fixed it and my rankings/traffic jumped to normal within a couple days – just like in your graph.

Google giveth and Google taketh away 😉

I do believe that every page of your site, whether it is static or dynamic, should have its own specific Title and Description. As for the keyword meta tag, I don’t use it at all; it seems more like a waste of time to me.

This is the first time I have ever heard anyone mention anything like QA. I also follow QA but only after learning the hard way many times. I now know that taking short cuts is simply just too costly.

Good article!

Hi Andrew

Good list. It’s a shame bigger teams don’t use code reviews and then run the code past their team lead prior to other testing.

It would cut down on a lot of silly code being pushed to QA or live.

It may take a little longer, but the quality is there and there are fewer comebacks.

But isn’t the saying “There is never enough time to get the job done, but plenty of time to go back and fix things?” heheh.

I’m sure most of us have been there 😉

I am there right now Kev!

Thanks for the list

I believe most webmasters don’t care about unique meta tags for each page, but as written in the post, it is one of the important factors that help you in the SERPs.

Can you tell me which elements actually affect keyword density? What’s the best average keyword density range?

This is a very informative article. Local Search Marketing is becoming a big thing now-a-days and it’s so important to follow the “chain of command” when it pertains to SEO and Local SEO.

Andrew, Nice job. Half the work is being brilliant enough to create a check list like this one.

As often as the “rules” change – any updates on this list? Or should this info be viewed as “current”?

THANKS!

Very good and informative post, Andrew… SEO for local business owners is going to be sought after more this year. Nice tip about inspecting your robots.txt file 😉

Good post, Andrew. I recently had a problem with a robots file on a hosting account with multiple add-on domains, which caused indexing problems with the add-on domains. I am always on the lookout to avoid any problems with robots files, as they can have a big impact.

Thanks – Aaron

Useful info, thanks for sharing. 🙂

Thanks for sharing ‘#1 Search Engine Ranking Factor: SEO QA‘. It’s useful information.

I read this post and couldn’t believe it… until I saw the date. It’s years old.

sir

I want to ask why my listing on Google Places cannot get a position on the first page of Google Places despite getting so many reviews. On the other hand, some listings on Google Places stay on the first page for months despite getting no reviews.

reply soon

vikas

Good one Andrew! I’m not sure that QA would actually be considered a true “search engine ranking factor” though, although I definitely agree with you on the importance.

I started off in Search Engine Land today and somehow stumbled across this post.

I have experienced loss of traffic due to absence of QA by webmasters. I agree that QA is probably the most important aspect of SEO but would go further and say it is the most important aspect of doing anything well. It always comes down to the execution. The devil is in the detail, as they say. To that extent, I’m with Robert in that I don’t see QA as a search engine ranking factor but an essential factor for success in any field.

Potato/Potahto Ewan. Best of luck fixing your problem!

There’s some really helpful info here for people involved in local SEO. I also think that the QA side of things is an ongoing process, and you would be surprised how many companies still have no analytics set up.

Excellent post Andrew. I am constantly trying to hammer these basics into the heads of my friends and clients. So many web businesses look at SEO as a panacea, thinking that just by applying it to their site it will magically make traffic appear. Far too often I see businesses making the very simple and correctable errors you talk about here, and their traffic suffers simply from lack of proper QA.

Glad you found it useful Dorothy. This post is an oldie but a goodie.

I couldn’t agree more. It is very difficult to explain to clients that SEO does not exist in a bubble and that small changes that the web developers make when ‘tidying up’ can have a big impact. One of my clients changed the URL of their key landing page and in the process negated 6 months of link building to the old URL. As you say, these instances can be corrected if found, but finding them can take time if the only clue that you have is that the number of visits has gone down…

Great post Andrew.

Local search is always growing and we all know that “cracking the Google algorithm” is next to impossible. The best way to keep up is to follow “local search” blogs like this one and find what works and what doesn’t. Following blogs is a great way to alleviate some of the trial and error work. But you never know 100% unless you’re utilizing your own check and balance system, so to speak.

Thanks for the additional information. I will be making some adjustments to a few of my sites. I have a couple of sites with very competitive keywords that are ranking and some with less competitive keywords that seem to be struggling.

I had to learn this lesson the hard way early on. Built tons of links on an early site and had to make changes later to repair the design. Over half the links were lost. I absolutely agree with you on the QA issue… Well said

QA is so important, but I do find it difficult to carry out with huge websites that include thousands of pages.

Great post. I have a question. My Google Places comments/reviews no longer show up. They show up in my AdWords as 9 comments, but when I click on my Google Places page or search for my company, I have 0. Any ideas? Thank you.

This was very helpful. I actually printed this out and went through it item by item. One of the biggest mistakes I noticed was “duplicate” meta tags on multiple pages. It’s now fixed, and I hope this will help my SERPs!

Great SEO tips, thanks. I did double-check all my tags, and this will definitely make me check any new content or sites before I release!

Great info! It’s still valid in 2016! … almost a decade later!

Wow! This article was written 10 years ago and it still holds some relevance as far as on-page checklists go!

Make sure you keep it clean. It’s best to hire a professional.

Thank you for the really good overview! It is SO important to go over that type of checklist when trying to optimize a new website on Google. I couldn’t agree more. We do it for all of our client websites.